MYRTUS Design and Programming Environment

MYRTUS Pillar 3

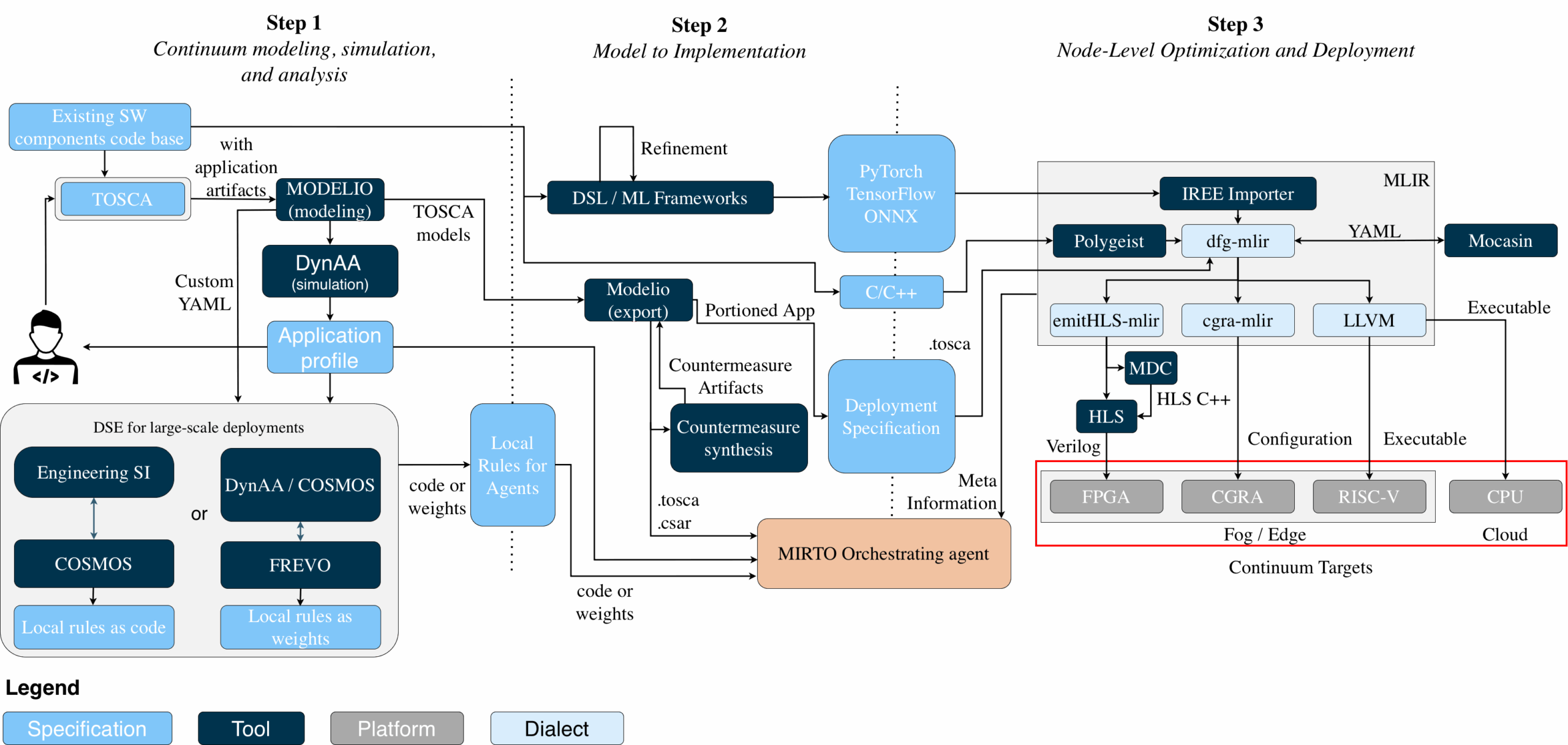

To facilitate the development of such systems, MYRTUS provides a reference design and programming environment (DPE) for continuum computing systems, featuring interoperable support for cross-layer modelling, threat analysis, design space exploration, application modelling, component synthesis, and code generation. To favour uptake of the solutions and overcome interoperability issues, we will leverage two standard and widely acknowledged technologies. The first is the OASIS Topology and Orchestration Specification for Cloud Applications (TOSCA), which enhances the portability and operational management of distributed applications and services across the continuum and throughout their lifecycle. The second is the Multi-Level Intermediate Representation (MLIR), which is used to build reusable and extensible compiler infrastructures and serves here to share information among the tools at the node level.

Figure 1. MYRTUS Design and Programming Environment

The MYRTUS DPE is responsible for creating the deployment specification for the continuum, including all the executables and configuration files needed to program the heterogeneous components. Moreover, it exports meta-information on the nonfunctional properties of the applications to aid the MIRTO Cognitive Engine in runtime decision-making. As shown in Figure 1, the DPE is composed of three steps: 1) a step for high-level modeling, simulation, and analysis; 2) a step for turning the model into a concrete implementation; and 3) a step for node-level optimization and deployment of the key computational kernels of the application.

Step 1 – Continuum modeling, simulation and analysis

This step extensively leverages Modelio to:

- i) model the functional partitioning of the overall scenario using the OASIS TOSCA standard;

- ii) allow the user to work with the Attack-Defence Tree Designer (ATD) to analyse the threats to which the system is exposed and synthesize a set of adapted countermeasures;

- iii) provide functional-level requirements, such as the expected end-to-end latency and fault conditions, leveraging its internal model-based KPI estimation capabilities.
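The threat analysis in item ii) can be illustrated with a toy attack-defence tree. The sketch below is a minimal illustration only and does not reflect how Modelio or the ATD represent their models: the `AttackNode` class, the gate names, and every threat and countermeasure name are assumptions made for this example. It checks whether a root attack goal remains feasible once a given set of countermeasures is deployed.

```python
from dataclasses import dataclass, field
from typing import List, Set


@dataclass
class AttackNode:
    """Node of a toy attack-defence tree (hypothetical structure, not Modelio's)."""
    name: str
    gate: str = "LEAF"  # "AND", "OR", or "LEAF"
    children: List["AttackNode"] = field(default_factory=list)
    # Names of the defences that block this node when deployed.
    countermeasures: Set[str] = field(default_factory=set)

    def feasible(self, deployed: Set[str]) -> bool:
        """Return True if this attack still succeeds given the deployed defences."""
        if self.countermeasures & deployed:
            return False  # a deployed countermeasure blocks this node
        if self.gate == "LEAF":
            return True
        results = [child.feasible(deployed) for child in self.children]
        # AND needs every sub-attack to succeed, OR needs at least one.
        return all(results) if self.gate == "AND" else any(results)


# Illustrative threat model; all names are invented for this example.
tree = AttackNode("tamper with edge node", "OR", [
    AttackNode("remote exploit", "AND", [
        AttackNode("reach management port", countermeasures={"firewall"}),
        AttackNode("use default credentials", countermeasures={"credential rotation"}),
    ]),
    AttackNode("malicious firmware update", countermeasures={"signed updates"}),
])

no_defence = tree.feasible(set())
firewall_only = tree.feasible({"firewall"})
hardened = tree.feasible({"firewall", "signed updates"})
```

In this example the firewall alone does not remove the threat, because the firmware update path remains open; adding signed updates closes both branches of the root OR gate.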

A major Modelio extension under development, TOSCA Designer, will allow users to automatically export the Cloud Service Archive (.csar) package. This package will contain the relevant TOSCA templates, scripts and files needed for workload deployment and management in all TOSCA-compatible environments, including Kubernetes-based ones. TOSCA Designer builds on the lessons learned with Modelio CAMEL Designer (see: MORPHEMIC project) to specify multiple aspects/domains related to multi-/cross-cloud applications.
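To make the .csar package more concrete, the following sketch assembles a minimal archive by hand. It is not the output of TOSCA Designer: the file layout and the `TOSCA.meta` keys follow a common reading of the TOSCA Simple Profile in YAML, while the version values, the `Created-By` string, the file names, and the contents of the service template are placeholders assumed for illustration.

```python
import io
import zipfile

# Metadata block of the archive. The keys follow the TOSCA Simple Profile in
# YAML; the values below are assumptions chosen for illustration.
TOSCA_META = (
    "TOSCA-Meta-File-Version: 1.1\n"
    "CSAR-Version: 1.1\n"
    "Created-By: example-author\n"
    "Entry-Definitions: definitions/service.yaml\n"
)

# Placeholder service template with a single compute node.
SERVICE_TEMPLATE = """\
tosca_definitions_version: tosca_simple_yaml_1_3
description: Placeholder service template (illustrative only).
topology_template:
  node_templates:
    edge_node:
      type: tosca.nodes.Compute
"""


def build_csar() -> bytes:
    """Pack the metadata and the entry template into an in-memory zip archive."""
    buffer = io.BytesIO()
    with zipfile.ZipFile(buffer, "w", zipfile.ZIP_DEFLATED) as archive:
        archive.writestr("TOSCA-Metadata/TOSCA.meta", TOSCA_META)
        archive.writestr("definitions/service.yaml", SERVICE_TEMPLATE)
    return buffer.getvalue()


csar_bytes = build_csar()

# Re-open the archive to list what a TOSCA-compatible tool would find inside.
with zipfile.ZipFile(io.BytesIO(csar_bytes)) as archive:
    csar_entries = sorted(archive.namelist())
```

Writing `csar_bytes` to a file with a `.csar` extension yields an ordinary zip file whose entry point is the template named in `Entry-Definitions`; a real package would also carry the scripts and artifacts referenced by that template.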

Step 2 – Model to Implementation

Going from the modelling level to deployment implies defining the Program Code. Modelio can extract the parts of the application (Portioned App) that require acceleration (e.g., DSP kernels), and it can be used directly to synthesize code. Predefined interoperability mechanisms guarantee that implementations can also be derived from external Domain Specific Languages (DSLs) and/or ML frameworks, or taken from existing hand-optimized C/C++ components.

The Program Code is then passed to Step 3 for compilation, optimization, and, in the case of FPGA-based computing components, accelerator synthesis. Step 2 also connects the DPE to the MIRTO Cognitive Engine in two ways. First, the component-level view of the application is fed to the MIRTO Cognitive Engine, completing the deployment specification model with all the needed .tosca/.csar files. Second, Modelio is used to synthesize the swarm agents to be included in the MIRTO Manager of the Cognitive Engine, starting from the local rules and the Threat Counter Measures Snippets that mitigate the security threats associated with the ATD.

Catalogue of tools involved in Step 2

Step 3 – Node Level Optimisation and Deployment

This step produces the executables and bitstreams for running and/or configuring the different computing components. A common interoperability framework based on MLIR, built atop the MLIR infrastructure of the EVEREST project, is adopted to allow i) importing third-party code (from DSLs or ML models), ii) accessing third-party tools (such as polyhedral compilers for optimization purposes), and iii) compiling code for different targets (such as reconfigurable accelerators, CPUs, or customizable RISC-V cores).

Mocasin, a high-level Python-based DSE tool for heterogeneous many-cores, will be extended to support Coarse-Grain Reconfigurable Architectures (CGRAs), while the dfg-mlir and cgra-mlir dialects will be used to model applications as dataflows and to generate CGRA configurations, respectively. Numerical kernels can be described with an extension of the teil dialect, which offers a NumPy-like front end, and custom data types are supported through the base2 dialect. Existing dialects and tools from the MLIR ecosystem will be leveraged to accept inputs in different languages (i.e., torch-MLIR and Polygeist) and to target i) FPGAs, producing Verilog through High-Level Synthesis (HLS) to feed MDC for the generation of runtime reconfigurable accelerators, and ii) CPUs/GPUs, through the LLVM Intermediate Representation.
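As a rough illustration of what design space exploration means in this setting, the sketch below exhaustively maps a few tasks onto two processing elements and keeps the mapping with the smallest makespan. It does not use Mocasin or its API: the task names, the execution times, and the cost model (tasks on one element run back to back, elements run in parallel, communication is ignored) are all assumptions made for this example.

```python
from itertools import product

# Hypothetical execution times (ms) of each task on each processing element.
EXEC_TIME_MS = {
    "fir_filter": {"cpu": 8.0, "cgra": 2.0},
    "fft": {"cpu": 12.0, "cgra": 3.0},
    "control": {"cpu": 1.0, "cgra": 6.0},
}
PROCESSING_ELEMENTS = ["cpu", "cgra"]


def makespan(mapping):
    """Simplified cost: sum the load per element, then take the busiest element."""
    load = {pe: 0.0 for pe in PROCESSING_ELEMENTS}
    for task, pe in mapping.items():
        load[pe] += EXEC_TIME_MS[task][pe]
    return max(load.values())


def explore():
    """Enumerate every task-to-element mapping and return the cheapest one."""
    tasks = list(EXEC_TIME_MS)
    best_mapping, best_cost = None, float("inf")
    for assignment in product(PROCESSING_ELEMENTS, repeat=len(tasks)):
        mapping = dict(zip(tasks, assignment))
        cost = makespan(mapping)
        if cost < best_cost:
            best_mapping, best_cost = mapping, cost
    return best_mapping, best_cost


best_mapping, best_cost = explore()
# With the numbers above, both kernels go to the CGRA and the control task
# stays on the CPU, for a makespan of 5.0 ms.
```

Exhaustive enumeration grows exponentially with the number of tasks, so it only works for toy cases like this one; practical DSE tools rely on heuristics or metaheuristics and on richer platform and communication models.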

For the HLS step, two options are currently under consideration: CIRCT-hls, already used by dfg-mlir, and Vitis-HLS, already supported by MDC. The deployment specification will be passed from Modelio to dfg-mlir in TOSCA format (i.e., YAML). The rest of the application is compiled with standard compilers, ensuring that it can interoperate with the accelerated portions.

Catalogue of tools involved in Step 3